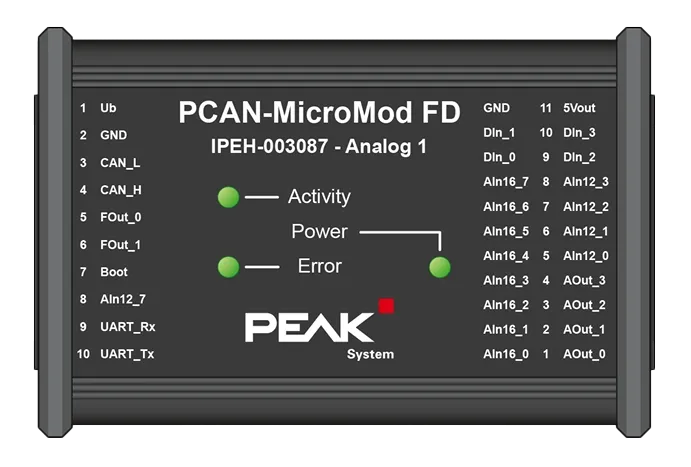

[Hongke Solution] Anpeng Precision Field Test: Zero-Development CAN Signal Synchronization in NVH Road Testing

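In an actual NVH road test, Anpeng Precision used Hongke's PCAN-MicroMod FD to convert vehicle CAN / CAN FD signals into analog voltages in real time. This achieved signal synchronization without any secondary development and integrated with the existing NVH data acquisition system at low cost.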

Caching is a technology that temporarily stores data in memory to reduce the number of data queries and shorten system response time. When the caching mechanism fails or its policy is outdated, client requests are forced to go directly to the primary database. Latency then climbs from milliseconds to hundreds of milliseconds or even seconds. With an efficient caching system, the system can respond within milliseconds, significantly improving real-time computing and the user experience.

Caching plays a key role in modern system acceleration. As application architectures become more complex and data volumes grow exponentially, the need for high-performance data access continues to rise.

As early as 2013, a study showed that every additional 100 milliseconds of delay reduced the average conversion rate of retail websites by 7.25%. By 2024, the average loading time of desktop web pages had dropped to around 2.5 seconds. This reflects the fact that users' expectations of a smooth digital experience are higher than ever before.

Today, caching is not only the core means of reducing latency but also an important cornerstone for keeping interactions stable and strengthening system resilience.

However, the challenge of modern caching lies in the high complexity of system architectures. As distributed architectures and technologies such as AI and cloud-native become commonplace, cache consistency and invalidation have become one of the most difficult parts of performance optimization.

Therefore, when choosing a caching framework, organizations must strike a balance among scalability, data consistency, and maintainability.

Sitting between the application layer and the data layer, caching dramatically accelerates access to frequently requested data (e.g., query results, session data, and computed results). It effectively reduces database load and increases system throughput.

Take retail e-commerce as an example. When users browse or check out, the cache can store computed results such as region and tax rate, so the database does not have to be queried repeatedly. This significantly improves transaction efficiency and reduces load.
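The e-commerce example can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical `query_tax_rate_from_db` lookup and made-up tax rates. It uses Python's in-process `functools.lru_cache` in place of a shared cache such as Redis, so only the first lookup per region reaches the "database."

```python
from functools import lru_cache

# Illustrative, made-up tax rates standing in for a database table (hypothetical).
TAX_TABLE = {"region-a": 0.05, "region-b": 0.08}
db_calls = {"count": 0}  # counts simulated database round trips


def query_tax_rate_from_db(region: str) -> float:
    """Hypothetical slow lookup; in practice this would be a database query."""
    db_calls["count"] += 1
    return TAX_TABLE[region]


@lru_cache(maxsize=1024)
def tax_rate(region: str) -> float:
    # Only the first call per region reaches the database; repeats are served from memory.
    return query_tax_rate_from_db(region)


def order_total(subtotal: float, region: str) -> float:
    return round(subtotal * (1 + tax_rate(region)), 2)
```

An in-process cache like this is private to one application instance. When many checkout servers need the same results, a shared cache plays this role instead. If rates change, `tax_rate.cache_clear()` discards the stale entries.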

When a system shows the following symptoms, its existing caching strategy may no longer meet actual demand:

Different business scenarios call for different caching strategies. The core differences lie in cache-miss handling, the data update mechanism, and consistency control. The following are some typical caching strategies:

Read-through: On a cache miss, the cache itself loads the data and returns it to the user. This suits read-heavy, write-light scenarios with stable access patterns, such as static product information.
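A read-through cache can be sketched as follows, assuming a hypothetical `loader` callback that stands in for the database query. The defining trait is that callers only ever talk to the cache. On a miss, the cache invokes the loader itself, stores the result and returns it.

```python
from typing import Callable, Dict, Generic, TypeVar

K = TypeVar("K")
V = TypeVar("V")


class ReadThroughCache(Generic[K, V]):
    """Minimal read-through cache: the cache, not the caller, loads data on a miss."""

    def __init__(self, loader: Callable[[K], V]) -> None:
        self._loader = loader          # hypothetical backing-store lookup
        self._store: Dict[K, V] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: K) -> V:
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        value = self._loader(key)      # the cache fetches from the database itself
        self._store[key] = value
        return value
```

Usage: wrap a product lookup such as `ReadThroughCache(load_product)`, where `load_product` is a hypothetical function, and call `get(product_id)` everywhere. Repeated reads of the same product never reach the loader again.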

Write-through: Every write operation updates the cache and the database synchronously. This keeps them consistent but may increase write latency. It is commonly used in applications that demand high data accuracy, such as financial trading systems.
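A write-through store might look like the sketch below. It assumes plain dictionaries stand in for the database and the cache tier. Each write updates both in the same call, so the write is only complete once both copies agree. That is where the extra write latency comes from.

```python
from typing import Dict


class WriteThroughStore:
    """Write-through sketch: every write goes to the database and the cache together."""

    def __init__(self) -> None:
        self.db: Dict[str, int] = {}      # stand-in for the primary database (assumption)
        self.cache: Dict[str, int] = {}   # stand-in for the cache tier (assumption)

    def write(self, key: str, value: int) -> None:
        # Write to the database first; the cache is updated only if that line did not raise.
        self.db[key] = value
        self.cache[key] = value

    def read(self, key: str) -> int:
        if key in self.cache:
            return self.cache[key]
        value = self.db[key]              # fallback for keys not yet cached
        self.cache[key] = value
        return value
```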

Cache-aside: The application manages the cache lifecycle itself. On the first query it reads from the database and then writes the result into the cache. This approach is highly flexible, but the first access is slightly slower. It suits data that is updated infrequently (e.g., user profiles or static configuration).
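In contrast to read-through, cache-aside puts the miss handling in application code. The sketch below assumes a dictionary-backed "database" and a hypothetical `ProfileService`. It also shows a common companion step: invalidating the cached entry on update so the next read reloads fresh data.

```python
from typing import Dict, Optional


class ProfileService:
    """Cache-aside sketch: the application checks the cache, loads from the DB on a miss,
    and populates the cache itself."""

    def __init__(self, db: Dict[str, dict]) -> None:
        self.db = db                      # stand-in for the database (assumption)
        self.cache: Dict[str, dict] = {}
        self.db_reads = 0

    def _load_from_db(self, user_id: str) -> Optional[dict]:
        self.db_reads += 1
        return self.db.get(user_id)

    def get_profile(self, user_id: str) -> Optional[dict]:
        cached = self.cache.get(user_id)
        if cached is not None:
            return cached                      # cache hit
        profile = self._load_from_db(user_id)  # miss: the first access pays DB latency
        if profile is not None:
            self.cache[user_id] = profile      # the application writes to the cache
        return profile

    def update_profile(self, user_id: str, profile: dict) -> None:
        self.db[user_id] = profile
        self.cache.pop(user_id, None)          # invalidate so the next read reloads
```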

Write-behind: Writes go to the cache first, and the database is updated asynchronously afterward. This improves write performance at the risk of data loss. It is mostly used in non-real-time scenarios such as log collection and data aggregation.
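The sketch below illustrates the write-behind idea, assuming dictionaries for the cache and database and a hypothetical batch size. Writes return after touching memory only, and the database is updated later in a batch. For determinism, the flush here runs when the batch fills. A real deployment would typically flush from a background worker or timer. Whatever is still pending when the process dies is the data-loss risk mentioned above.

```python
from typing import Dict, List, Tuple


class WriteBehindCache:
    """Write-behind sketch: writes land in the cache and a pending queue,
    and are persisted to the database later in batches."""

    def __init__(self, batch_size: int = 3) -> None:
        self.cache: Dict[str, str] = {}
        self.db: Dict[str, str] = {}                 # stand-in for the database (assumption)
        self.pending: List[Tuple[str, str]] = []     # lost if the process crashes before flush()
        self.batch_size = batch_size
        self.flushes = 0

    def write(self, key: str, value: str) -> None:
        self.cache[key] = value                      # fast path: memory only
        self.pending.append((key, value))
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        # In a real system this would run asynchronously (background thread or timer).
        if not self.pending:
            return
        for key, value in self.pending:
            self.db[key] = value
        self.pending.clear()
        self.flushes += 1
```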

TTL expiration: Set a time-to-live (TTL) on cached data so that it expires automatically. This is commonly used for static or semi-static resources (e.g., website CSS/JS files).
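The TTL idea is shown in the sketch below. Each entry records its own expiry time, and an expired entry is treated as a miss and removed lazily when it is read. The injectable `clock` parameter is an assumption added so the behavior can be tested without sleeping. In Redis, the same effect is usually achieved with the `EX` option of `SET` or with the `EXPIRE` command.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Each entry stores an absolute expiry time; expired entries behave as misses."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock                                  # injectable for testing (assumption)
        self._data: Dict[str, Tuple[float, str]] = {}

    def set(self, key: str, value: str) -> None:
        self._data[key] = (self.clock() + self.ttl, value)

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._data[key]                             # lazily evict the expired entry
            return None
        return value
```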

Proactive refresh: Update the cache as soon as database changes are detected, keeping the data consistent. This can be paired with Redis Data Integration (RDI) to achieve real-time synchronization and cache warm-up.
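The proactive pattern can be sketched as a handler that applies each detected database change to the cache, plus a warm-up step that preloads data before traffic arrives. The `ChangeEvent` shape below is a hypothetical record format chosen for illustration. It is not RDI's actual interface, and capturing the changes from the database is outside the scope of the sketch.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class ChangeEvent:
    """Hypothetical change record, e.g. as produced by a change-data-capture pipeline."""
    op: str                      # "upsert" or "delete" (assumed vocabulary)
    key: str
    value: Optional[dict] = None


class ProactiveCache:
    def __init__(self) -> None:
        self.cache: Dict[str, dict] = {}

    def warm(self, rows: Dict[str, dict]) -> None:
        # Preload (warm up) the cache before traffic arrives.
        self.cache.update(rows)

    def on_change(self, event: ChangeEvent) -> None:
        # Apply each detected database change to the cache immediately.
        if event.op == "upsert":
            if event.value is None:
                raise ValueError("upsert requires a value")
            self.cache[event.key] = event.value
        elif event.op == "delete":
            self.cache.pop(event.key, None)
        else:
            raise ValueError(f"unknown op: {event.op}")
```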

Traditional caching focuses mostly on read acceleration. In write-heavy or mixed-load environments such as financial trading systems, however, the value of cache optimization needs to be redefined.

Real-world cases include:

Real-time vehicle tracking system: Persistent caching is required to retain state data and ensure stability.

Financial market aggregation platform: Market data must be pushed within milliseconds to minimize the impact of delays on trading decisions.

Deutsche Börse Group uses Redis smart caching technology to meet stringent latency and regulatory requirements. Maja Schwob, its head of IT, stated:

"The real-time data processing power of Redis is at the heart of our high-frequency transaction reporting system."

A robust caching strategy requires continuous monitoring and visualization. Below are the key metrics for evaluating cache performance:

Cache hit rate: Reflects the actual effectiveness of the cache.

Eviction rate / refill rate: Measures how well cache capacity matches the hotness of the data.

Response latency: Tracks end-to-end performance stability.

Error rate: Monitors connection timeouts, exceptions, and failures.
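The metrics above reduce to simple ratios over counters. The sketch below assumes a hypothetical `CacheStats` record. It also assumes, as a choice of definition, that the eviction and error rates are normalized per request. Latency is summarized as a nearest-rank 95th percentile. A monitoring stack would normally export the raw counters and compute these ratios in queries.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CacheStats:
    """Hypothetical counters collected by a cache client."""
    hits: int = 0
    misses: int = 0
    evictions: int = 0
    errors: int = 0
    latencies_ms: List[float] = field(default_factory=list)

    @property
    def requests(self) -> int:
        return self.hits + self.misses

    def hit_rate(self) -> float:
        return self.hits / self.requests if self.requests else 0.0

    def eviction_rate(self) -> float:
        # Evictions per request (assumed definition); a rising value suggests the
        # cache is too small for the hot data, which keeps being pushed out.
        return self.evictions / self.requests if self.requests else 0.0

    def error_rate(self) -> float:
        # Errors per request (assumed definition).
        return self.errors / self.requests if self.requests else 0.0

    def p95_latency_ms(self) -> float:
        if not self.latencies_ms:
            return 0.0
        ordered = sorted(self.latencies_ms)
        rank = (95 * len(ordered) + 99) // 100   # nearest-rank: ceil(0.95 * n), integer math
        return ordered[max(rank - 1, 0)]
```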

Prometheus + Grafana can be combined to build a visual monitoring system, and OpenTelemetry can provide distributed tracing. Together they allow cache leakage, expired data, or cascading failures to be detected immediately.

Compared with traditional in-memory databases, Redis provides an enterprise-grade distributed caching solution with high reliability and scalability.

For example, an international advertising technology company uses Redis to support more than 50 microservices that process tens of millions of requests per day. This demonstrates Redis's stability and reliability in high-load environments.

The design of a caching strategy determines the balance between system performance and cost. When database bottlenecks appear or the user experience degrades, the caching architecture should be re-evaluated and optimized along the following three dimensions:

Choose the right combination of caching strategies based on business characteristics;

Build an end-to-end observability and monitoring system;

Replace native solutions with professional-grade caching solutions such as Redis.

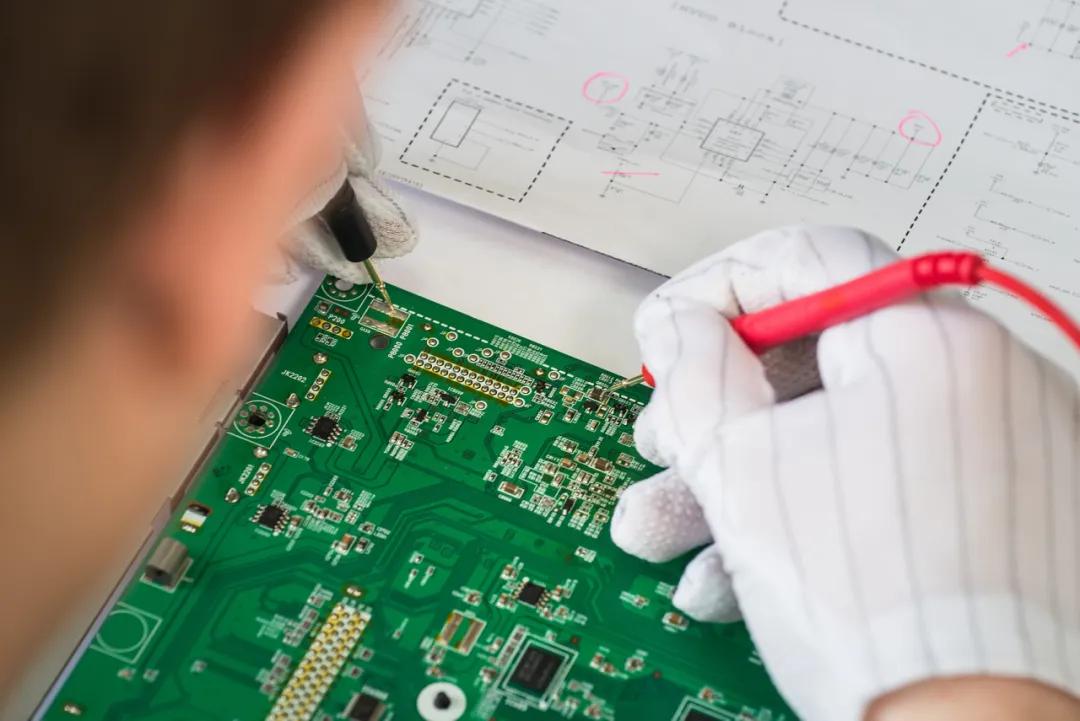

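Hongke combines AR smart glasses with AI recognition to build a standardized PCB quality-inspection workflow. The workflow integrates MES, ERP, and AOI systems, lowers missed-detection rates, raises yield, and accelerates the digital upgrade of electronics manufacturing.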

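An in-depth look at how Hongke's GNSS simulator supports complete-drone testing. It covers multi-constellation GNSS simulation, centimeter-level RTK positioning, anti-jamming tests, and multi-sensor fusion validation, enabling efficient and safe UAV development.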