How to Identify Invisible Energy Wastage in Buildings - Panorama Intelligent Energy Management

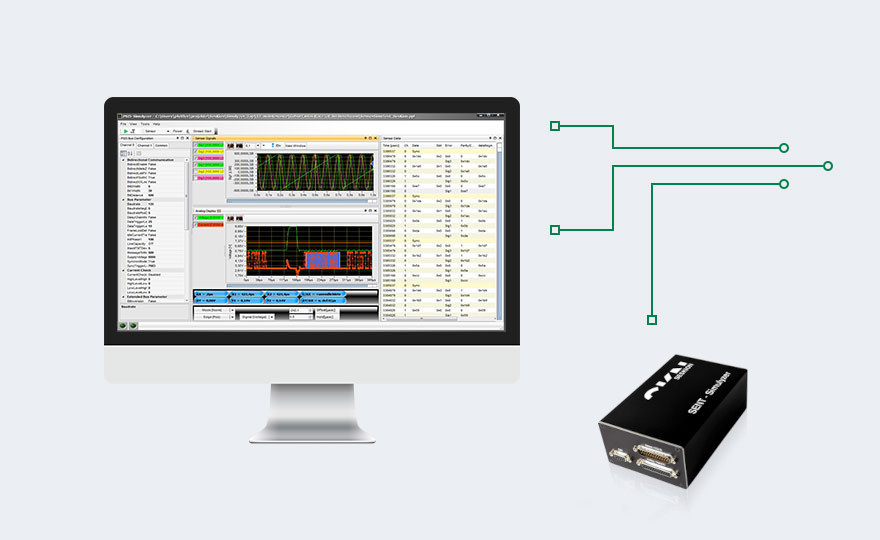

The Energy SCADA system helps organizations identify invisible energy wastage in buildings by combining a building management system (BMS), intelligent sensing, energy monitoring, and data analysis, so that they can improve energy efficiency and meet energy-saving and emission-reduction requirements. Learn how Panorama SCADA enables smart buildings and efficient energy management.