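[HongKe Solution] From Passive Defense to Proactive Prevention: Using KnowBe4 to Handle Risk Assessments and Audits under the Critical Infrastructure Ordinance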

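KnowBe4 offers enterprises a simplified way to comply with Hong Kong's Critical Infrastructure Protection Ordinance (《關鍵基礎設施保護條例》). Facing the strict requirements of Sections 24 and 25, it turns hard-to-quantify "human risk" into trackable, real-world data. This not only closes the blind spots of traditional assessments but also gives annual audits solid evidence that control measures are "operating effectively". Through automated reporting and continuous exercises, enterprises can substantially reduce security risk while easily meeting regulatory requirements, making the key shift from "passive compliance" to "proactive defense".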

Generative AI is changing the game, redefining the future of creativity, automation, and even cybersecurity. Models like GPT-4 and DeepSeek can generate human-like text, beautiful images, and software code, opening up a whole new world of possibilities for businesses and individuals. However, with great power comes great risk. Cybersecurity experts are increasingly concerned about generative AI, not only because of its technological breakthroughs, but also because of the potential security risks it poses. In this article, we explore the complexities of generative AI, including how it works, the security risks, and how organizations can effectively mitigate them.

These technologies are already widely used in media, design, healthcare, content creation, and software development, where they have dramatically improved productivity. However, the development of generative AI also brings new challenges and risks.

Generative AI presents tremendous opportunities, but it also introduces a host of cybersecurity threats. From data breaches to AI-generated voice and deepfakes, the technology poses significant risks to businesses and government agencies. Here are some of the key security risks:

One of the most serious problems facing generative AI is data leakage. Because these models are trained on massive datasets, they may inadvertently reproduce sensitive information from the training data, thereby violating user privacy. For example, OpenAI has stated that large language models may inadvertently expose input data, which may contain personally identifiable information (PII), at a rate of 1-2%. For industries subject to stringent data regulation, such as healthcare or finance, a data breach could result in significant financial loss or reputational damage.

Cybercriminals can use generative AI to produce malicious content, including malware and ransomware scripts. Some attackers have begun using GPT to generate sophisticated phishing emails and even to write attack code directly, lowering the technical barrier to hacking. According to Check Point, advanced persistent threat (APT) groups have begun using AI-generated phishing scripts to evade detection by traditional security tools.

In a model inversion attack, an attacker queries an AI model to infer or recover its training data. This can lead to the disclosure of sensitive (or even anonymized) data, which, once in the hands of cybercriminals, could give them access to proprietary algorithms or users' personal information. For example, Securiti researchers have demonstrated that, in the absence of adequate safeguards, an attacker can extract private information through a generative AI model.

According to a study by PricewaterhouseCoopers (PwC), deepfake technologies could cause up to US$250 million in losses by 2026, mainly through fraud and misinformation.

In the face of current and future AI security challenges, businesses and organizations must adopt a comprehensive security strategy to address the risks that generative AI can bring. Here are some key mitigation measures:

Data cleansing is one of the best ways to minimize the risk of data leakage from AI training. Organizations should clean their datasets to remove all personally identifiable information before using the data, so that AI models cannot inadvertently reveal it. Data protection can be further strengthened with differential privacy, which adds calibrated noise so that a model's output does not expose any single user's data. Companies such as Google and Apple have already adopted differential privacy to protect user information in their large-scale AI models.
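The two ideas above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: the regular expressions and the names `scrub_pii` and `private_count` are hypothetical, the patterns catch only simple e-mail addresses and phone-like numbers, and the differential privacy part uses the standard Laplace mechanism for a counting query with sensitivity 1.

```python
import random
import re

# Hypothetical, deliberately simple patterns; real PII detection needs far broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{6,}\d")


def scrub_pii(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise of scale 1/epsilon (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    print(scrub_pii("Contact alice@example.com or +852 1234 5678."))
    rng = random.Random(0)
    print(private_count(1000, epsilon=0.5, rng=rng))
```

A smaller `epsilon` means more noise and stronger privacy. Scrubbing happens before training, while the noisy count illustrates how aggregate statistics can be released without pinpointing one user.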

It is important to limit access to AI models. Enterprises can adopt role-based access control (RBAC) to ensure that only authorized users can use the AI system. In addition, AI outputs and training data should be encrypted in transit to prevent theft or tampering.
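As a rough sketch of how RBAC might gate access to a model, the example below maps roles to permissions and denies by default. The role names, the `Permission` values, and the `query_model` function are all hypothetical placeholders; a real deployment would normally rely on the organization's identity provider rather than an in-code table.

```python
from enum import Enum


class Permission(Enum):
    QUERY_MODEL = "query_model"
    VIEW_TRAINING_DATA = "view_training_data"
    UPDATE_MODEL = "update_model"


# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {Permission.QUERY_MODEL},
    "data_engineer": {Permission.QUERY_MODEL, Permission.VIEW_TRAINING_DATA},
    "ml_admin": set(Permission),
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles get no permissions."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def query_model(role: str, prompt: str) -> str:
    """Check the caller's role before forwarding the prompt to the model."""
    if not is_allowed(role, Permission.QUERY_MODEL):
        raise PermissionError(f"role {role!r} may not query the model")
    # Placeholder standing in for the actual model call.
    return f"(model response to {prompt!r})"


if __name__ == "__main__":
    print(is_allowed("analyst", Permission.QUERY_MODEL))
    print(is_allowed("analyst", Permission.UPDATE_MODEL))
```

Encryption in transit is not shown here; in practice it is usually handled at the transport layer, for example by serving the model API over TLS.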

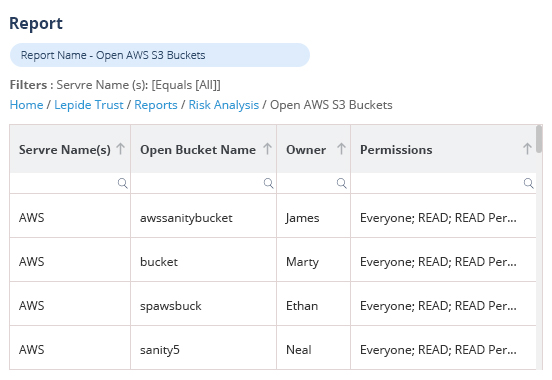

In the face of the security challenges posed by generative AI, the Lepide Data Security Platform provides a comprehensive and proactive solution for mitigating the associated risks. Lepide monitors data interactions, user privileges, and access activity in real time, helping organizations detect and respond to suspicious behavior promptly and preventing security incidents from escalating into serious data breaches.

One of Lepide's core strengths is its ability to prevent unauthorized access and minimize the risk of data leakage in AI-driven environments. With detailed audit logs, organizations can track all changes to sensitive data, ensuring visibility and control over AI-related data usage.

In addition to security monitoring, Lepide also plays a key role in compliance management. It automates the generation of compliance reports and provides customized security alerts, helping organizations comply with stringent data privacy regulations such as GDPR, CCPA, and HIPAA. This reduces the legal and financial risks of non-compliance and ensures that sensitive data is always strictly protected.

In addition, Lepide uses AI-driven anomaly detection technology to recognize and respond to unusual data access patterns. This proactive defense strategy helps detect insider threats, AI abuse, or potential cyberattacks in a timely manner, so that organizations can act before a security incident occurs.
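To make the idea of flagging unusual access patterns concrete, here is a generic sketch that compares each user's access count today against that user's own historical baseline using a simple z-score. It is not Lepide's algorithm, which the source does not describe; the function name `flag_anomalies`, the data layout, and the threshold of 3 standard deviations are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(
    history: dict[str, list[int]],
    today: dict[str, int],
    threshold: float = 3.0,
) -> list[str]:
    """Return users whose access count today spikes above their own baseline.

    A user is flagged when today's count exceeds the historical mean by more
    than `threshold` sample standard deviations.
    """
    flagged = []
    for user, counts in history.items():
        if len(counts) < 2:
            continue  # not enough data to estimate a baseline
        mu = mean(counts)
        sigma = stdev(counts)
        count = today.get(user, 0)
        if sigma == 0:
            # Perfectly flat baseline: any increase counts as unusual.
            if count > mu:
                flagged.append(user)
        elif (count - mu) / sigma > threshold:
            flagged.append(user)
    return flagged


if __name__ == "__main__":
    history = {"alice": [10, 12, 11, 9, 10], "bob": [5, 6, 5, 7, 6]}
    today = {"alice": 11, "bob": 60}
    print(flag_anomalies(history, today))
```

A per-user baseline is used so that a heavy but consistent user is not flagged merely for being busy. Production systems typically combine many more signals than a single daily count.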

By integrating automated risk assessment, fine-grained access control, and advanced threat intelligence, Lepide enables organizations to adopt generative AI technologies with confidence while ensuring data security and compliance.

Generative AI is reshaping the future of technology, but the security risks it poses cannot be ignored. From data breaches to AI-generated malware, the threats are real and constantly evolving. The solution, however, is not to avoid AI but to take proactive measures, including encryption, monitoring, and ethical governance, to ensure that it is used safely.

By combining strong security practices with human oversight, organizations can unlock the full potential of generative AI while maintaining security. The key is to strike a balance between innovation and responsibility, ensuring that AI always adheres to security and ethical standards while driving technological progress.

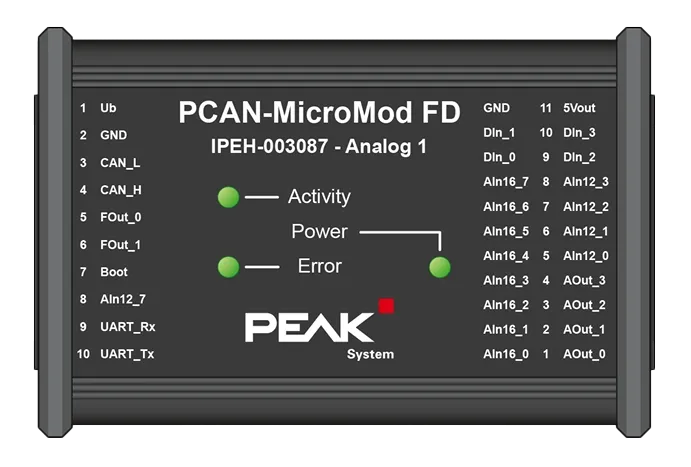

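In real-world NVH road tests, 安鵬精密 used the HongKe PCAN-MicroMod FD to convert vehicle CAN / CAN FD signals into analog voltages in real time. Signal synchronization required no secondary development, and the setup integrated with the existing NVH data acquisition system at low cost.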

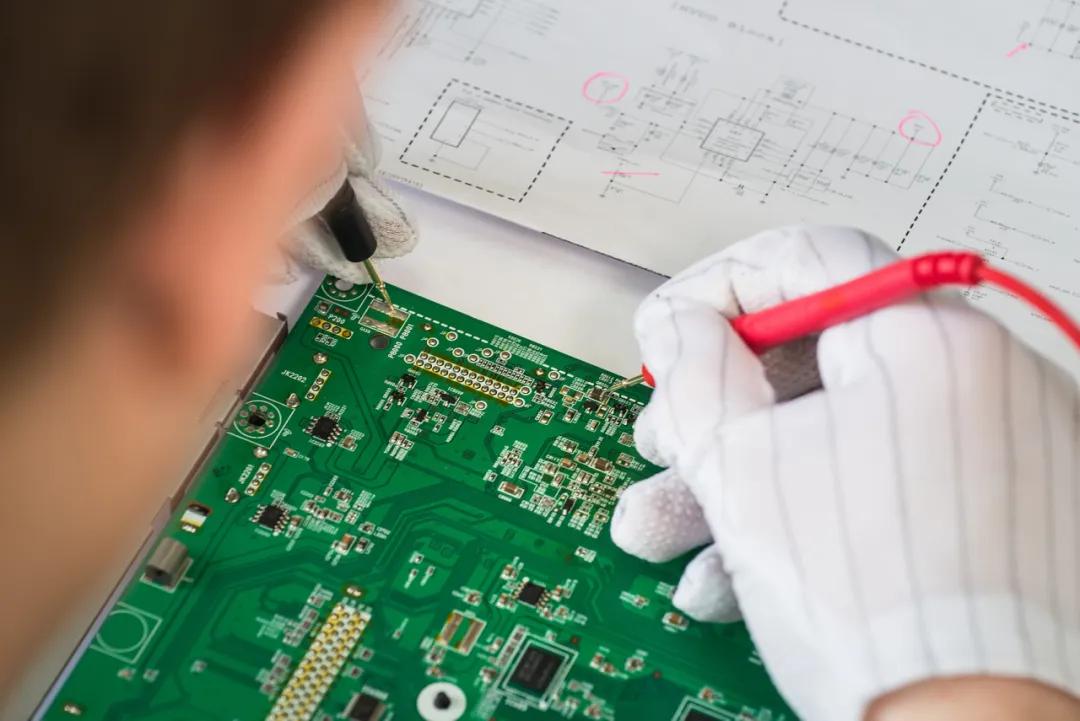

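HongKe combines AR smart glasses with AI recognition technology to build a standardized PCB quality inspection workflow that integrates MES, ERP, and AOI systems, reducing the missed-defect rate, improving yield, and accelerating the digital upgrade of electronics manufacturing.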